Content

Master Data Extraction: Your 2024 Guide to Website Scrapers

The Ultimate 2024 Guide to Website Scrapers: Master Data Extraction

The digital world holds vast amounts of information.

Learning to extract this data can provide a significant advantage.

This guide will help you understand and use a website scraper effectively.

You will discover how to unlock valuable insights for various needs.

Understanding the Fundamentals of a Website Scraper

Data is the new gold in today's digital economy.

Businesses and individuals increasingly need to collect information from the web.

A website scraper automates this crucial process.

It helps you gather structured data from unstructured web pages.

What Exactly is a Website Scraper?

A website scraper is a software program.

This tool automatically extracts information from websites.

Think of it as a digital assistant that reads web pages for you.

It collects specific data points you define.

How a Website Scraper Extracts Information

A website scraper works by sending requests to web servers.

It then receives the HTML content of a page.

The program parses this content to find the desired data.

Finally, it saves the extracted information in a usable format like CSV or JSON.

Differentiating a Website Scraper from a Web Crawler

People often confuse a website scraper with a web crawler.

A web crawler, like Google's bot, explores the internet to index pages.

Its main goal is to discover new content and map website structures.

A website scraper, however, focuses on extracting specific data from known pages.

Key Benefits and Use Cases for Data Extraction Tools

Using such tools offers many advantages.

They can save countless hours of manual data collection.

This automation allows you to focus on analyzing the data, not just gathering it.

Let's explore some common applications.

Leveraging Data Extraction for Market Research

Market research benefits greatly from data extraction.

You can collect product prices, customer reviews, and trend data.

A scraping tool helps you understand market demand and competitive landscapes.

This information supports better business decisions.

Enhancing Lead Generation with a Scraping Tool

Lead generation is another powerful use case.

You can extract contact details, company information, and professional profiles.

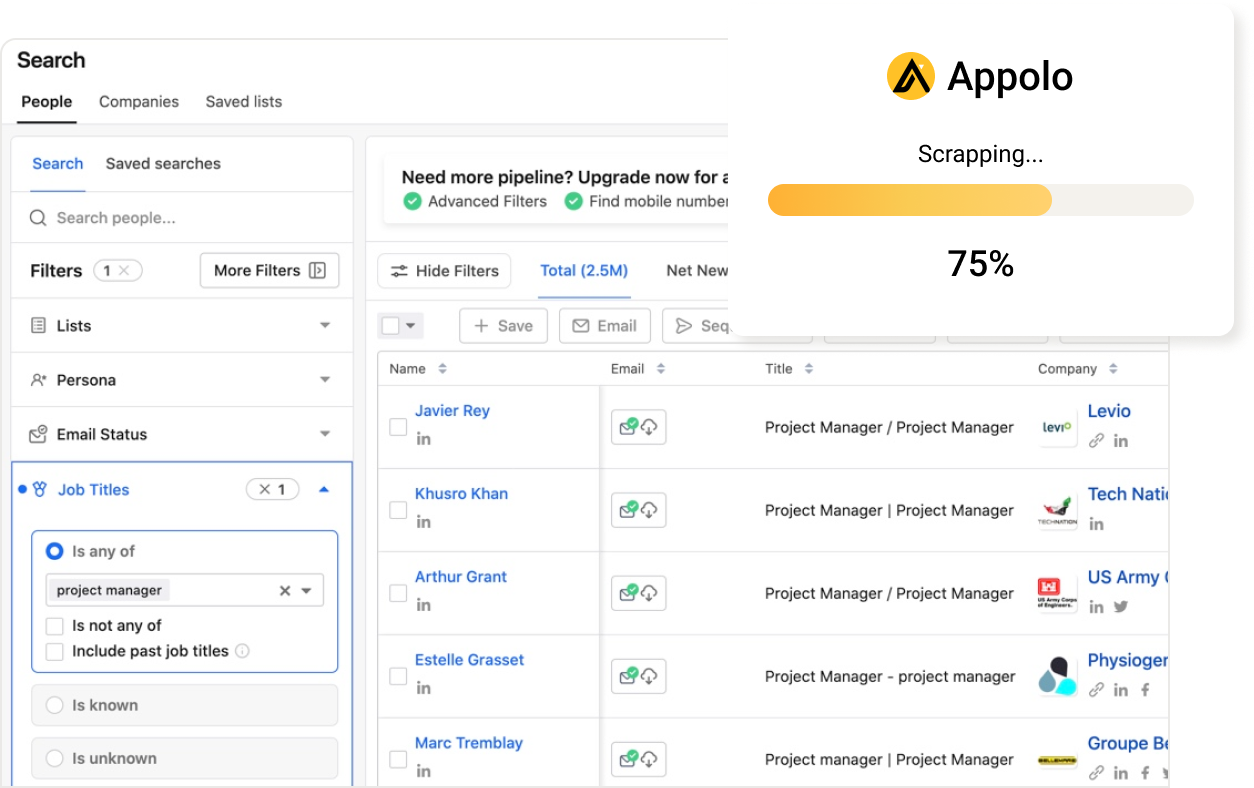

A specialized scraping tool like Scrupp can streamline this process significantly.

Scrupp integrates with platforms like LinkedIn and Apollo.io to gather verified emails and comprehensive data, boosting your sales and marketing efforts. Learn more about Scrupp's features.

It also supports CSV enrichment to enhance your existing contact lists. Discover Scrupp's capabilities here.

Scrupp provides a user-friendly design for efficient data extraction. Check Scrupp pricing plans.

Monitoring Competitors Using Website Scraping Software

Keeping an eye on competitors is vital for business strategy.

This software can track their pricing changes and product launches.

You can also monitor their new job postings or press releases.

This helps you stay competitive and adapt quickly.

Choosing the Best Data Extraction Tool for Your Needs

Selecting the right tool is essential for successful data extraction.

Many options exist, from simple browser extensions to complex software.

Your choice depends on your technical skills and project scope.

Consider your specific data requirements carefully.

Evaluating Free Website Scraper Options

Several free website scraper options are available.

These are great for beginners or small, one-off projects.

They often have limitations on usage, speed, or features.

Always review their terms and conditions before starting.

Essential Features of a Top Data Extraction Tool

A good data extraction tool should offer several key features.

Look for ease of use, robust error handling, and scheduling options.

The ability to export data in various formats is also crucial.

Consider tools that can handle dynamic websites and CAPTCHAs.

Here’s a table outlining important features:

| Feature | Description | Benefit |

|---|---|---|

| User Interface | Intuitive design, often drag-and-drop | Speeds up setup, reduces learning curve |

| Proxy Support | Ability to rotate IP addresses | Prevents IP blocks, maintains anonymity |

| Data Export Formats | CSV, JSON, Excel, XML | Flexibility for analysis and integration |

| Scheduling | Automate scraping at set intervals | Enables continuous monitoring of data |

| CAPTCHA Solving | Integrations or built-in solutions | Handles common website security measures |

Comparing Different Scraping Tool Solutions

The market offers a wide range of scraping tool solutions.

Some are cloud-based, requiring no local installation.

Others are desktop applications, offering more control.

Research user reviews and support quality before deciding on the best website scraping software for your needs.

Step-by-Step Guide to Using Your Data Extraction Tool Effectively

Once you choose your tool, the real work begins.

Effective data extraction requires careful planning and execution.

Follow these steps to maximize your success.

Even complex projects can be managed with a systematic approach.

Setting Up Your First Data Extraction Project

First, identify the website and the data you need.

Map out the website's structure and the specific elements to extract.

Configure your data extraction tool with the correct selectors.

Always start with a small test run to ensure everything works as expected.

Navigating Common Challenges with Data Extraction

You might encounter various challenges during scraping.

Websites can change their layout, breaking your scraper.

Anti-scraping measures like CAPTCHAs or IP blocks are common.

Regularly update your scraper and use proxies to overcome these hurdles.

Ensuring Data Quality and Accuracy

Raw extracted data may not always be clean.

You need to validate and clean the data after extraction.

Remove duplicates, correct formatting errors, and fill in missing values.

High-quality data leads to more reliable insights and better decisions.

Ethical Considerations and Best Practices for Website Scraping

Website scraping comes with important responsibilities.

Always prioritize ethical behavior and legal compliance.

Ignoring these aspects can lead to serious consequences.

Protect both yourself and the websites you interact with.

Adhering to Legal and Ethical Guidelines

Data scraping operates in a complex legal landscape.

Laws like GDPR and CCPA govern data privacy and usage.

Always check if the data you extract is publicly available or protected.

Consult legal advice if you are unsure about specific data types or uses.

Respecting Website Terms of Service

Most websites have a "Terms of Service" or "Robots.txt" file.

These files outline rules for accessing and using their content.

Always review these documents before you start scraping.

Respecting these terms helps maintain a positive web ecosystem.

Avoiding IP Blocks and Maintaining Anonymity

Websites often block IPs that send too many requests too quickly.

Use delays between requests to mimic human browsing behavior.

Employ proxy servers and VPNs to rotate your IP address.

This strategy helps you avoid detection and ensures continuous access.

The Future of Data Extraction with Advanced Tools

The field of data extraction is constantly evolving.

New technologies are making scraping more powerful and efficient.

These advancements promise even greater capabilities for data collection.

Staying informed about these trends is crucial for long-term success.

AI and Machine Learning in Website Scraping

Artificial intelligence and machine learning are transforming scraping.

AI can help identify data patterns on complex, dynamic websites.

Machine learning models can adapt to website changes automatically.

This reduces the need for constant manual scraper adjustments.

Emerging Trends in Scraping Tool Development

We are seeing more intelligent and user-friendly tools.

Cloud-based platforms offer scalability and ease of deployment.

Integration with other data analysis tools is also becoming common.

Expect more specialized solutions for niche data extraction needs.

Conclusion: Maximizing Your Data Extraction Potential

Mastering a website scraper opens up a world of data possibilities.

From market research to lead generation, the applications are vast.

Always choose the right tool and adhere to ethical guidelines.

By doing so, you can unlock significant value from the web's immense data.

What kind of data can you extract with a website scraper?

A website scraper can gather a wide range of publicly available information.

You can collect product details, pricing, and customer reviews from e-commerce sites.

It also helps you get contact information, news articles, and job postings.

Here are some common types of data you can extract:

| Data Type | Example Use Case | Benefit |

|---|---|---|

| Product Prices & Details | Competitive pricing analysis | Optimize your pricing strategy |

| Customer Reviews & Ratings | Sentiment analysis, product improvement | Understand customer needs better |

| Contact Information | Lead generation, market research | Expand your sales outreach |

| News Articles & Press Releases | Industry trend monitoring | Stay informed on market changes |

| Real Estate Listings | Market analysis, investment opportunities | Identify valuable property trends |

How can I choose the best website scraping software for my specific needs?

Choosing the best website scraping software depends on your project's complexity and your technical skills.

Consider if you need to handle dynamic content or frequent website changes.

Think about the volume of data you plan to extract regularly.

For professional lead generation, a specialized tool like Scrupp offers robust features and ease of use.

Here are key factors to consider:

- Ease of use: Look for user-friendly interfaces, especially if you are new to scraping.

- Scalability: Ensure the tool can handle large volumes of data and frequent runs.

- Features: Check for proxy support, scheduling, and various export formats.

- Support: Good customer support can be vital when you encounter issues.

- Cost: Evaluate pricing models against your budget and expected ROI.

How do you handle websites that block scrapers or have CAPTCHAs?

Websites use various techniques to prevent automated scraping, like IP blocking or CAPTCHAs.

To avoid blocks, use a rotating pool of proxy IP addresses.

You should also set delays between your requests to mimic human browsing behavior.

For CAPTCHAs, some advanced tools offer built-in solvers or integrate with third-party CAPTCHA-solving services.

Can I use a free website scraper for professional tasks?

A free website scraper can be useful for learning or for very small, one-time projects.

However, they often come with significant limitations for professional use.

These limitations include slower speeds, restricted data volumes, and fewer advanced features.

For reliable, large-scale, or continuous data extraction, investing in a paid solution is usually necessary.

| Feature | Free Scraper | Paid Scraper |

|---|---|---|

| Data Volume | Limited | High / Unlimited |

| Speed | Slower | Faster |

| Advanced Features | Basic / None | Proxy support, scheduling, CAPTCHA solving |

| Support | Community / None | Dedicated customer support |

| Reliability | Variable | High |

How does a scraping tool like Scrupp enhance lead generation and sales efforts?

A scraping tool like Scrupp is specifically designed to boost your sales and marketing.

It integrates seamlessly with platforms such as LinkedIn and Apollo.io.

Scrupp helps you extract verified email addresses and comprehensive professional data.

This allows your sales team to build targeted lead lists efficiently and enrich existing CRM data. Explore Scrupp's lead generation capabilities.

What are the ethical considerations when using a website scraper?

It is crucial to follow ethical and legal guidelines when you use a website scraper.

Always review a website's "Robots.txt" file and "Terms of Service" before scraping.

Avoid scraping personal or sensitive data that is not publicly available or protected by privacy laws like GDPR or CCPA.

Scrape responsibly by not overloading servers with too many requests, which can harm website performance.

How useful was this post?

Click on a star to rate it!

Export Leads from

Sales Navigator, Apollo, Linkedin