Build a Powerful Scraper Website: Your Guide to Web Data

Welcome to the world of web data extraction.

This guide will show you how to build a powerful **scraper website**.

You will learn about tools, legal aspects, and how to use data for business success.

Let's start your journey into automated data collection and insights.

Understanding Web Scraping: What is a Scraper Website?

Web scraping is a powerful technique.

It helps you gather information from the internet automatically.

Understanding its basics is your first step toward mastery.

Let's explore what it truly means to create a data collection system.

What is Web Scraping? A Quick Overview

Web scraping is the automated process of collecting structured data from websites.

Think of it like copying specific information, but much faster and at scale.

It allows you to get precise data points from many web pages quickly.

This collected data is often publicly available information.

Defining a Scraper Website: More Than Just a Script

A **scraper website** is an automated system designed for continuous data collection.

It does more than just run a single script one time.

This system continuously collects, processes, and stores web data over time.

A well-built system can run 24/7, keeping your collected data fresh and updated.
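
The idea of a system that keeps running, rather than a one-off script, can be sketched as a simple loop. This is a minimal illustration, not a production scheduler: `run_on_schedule` and `collect_once` are hypothetical names, and a real deployment would more likely rely on cron or a task queue.

```python
import time
from typing import Callable, List


def run_on_schedule(job: Callable[[], dict], interval_seconds: float, max_runs: int) -> List[dict]:
    """Call `job` repeatedly, pausing `interval_seconds` between runs."""
    results = []
    for run_number in range(max_runs):
        try:
            results.append(job())
        except Exception as error:
            # Record the failure and keep going so one bad run does not stop the loop.
            results.append({"error": str(error)})
        if run_number < max_runs - 1:
            time.sleep(interval_seconds)
    return results


def collect_once() -> dict:
    # Hypothetical placeholder: a real job would fetch, parse, and store data.
    return {"collected_at": time.time(), "items": 0}


for snapshot in run_on_schedule(collect_once, interval_seconds=0.1, max_runs=3):
    print(snapshot)
```

Catching errors inside the loop is a deliberate choice here: a long-running collector usually should survive a single failed run.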

The Core Components of a Data Scraper

Every effective **data scraper** has several key parts working together.

First, it needs a way to make web requests to target websites.

Next, it requires a parser to read and understand the website's HTML code.

Finally, it needs a robust system to store the extracted information for later use.

Why Build a Scraper Website? Key Use Cases & Benefits

Building a **scraper website** offers many significant advantages for businesses.

It helps companies make smarter, data-driven decisions every day.

You can gain valuable insights that were once difficult or impossible to obtain.

Let's look at some key ways you can leverage this powerful technology.

Powering Lead Generation Websites with Scraped Data

Scraped data can completely transform a **lead generation website**.

You can find accurate contact details and company information for potential customers.

This helps you target your sales and marketing efforts more precisely and effectively.

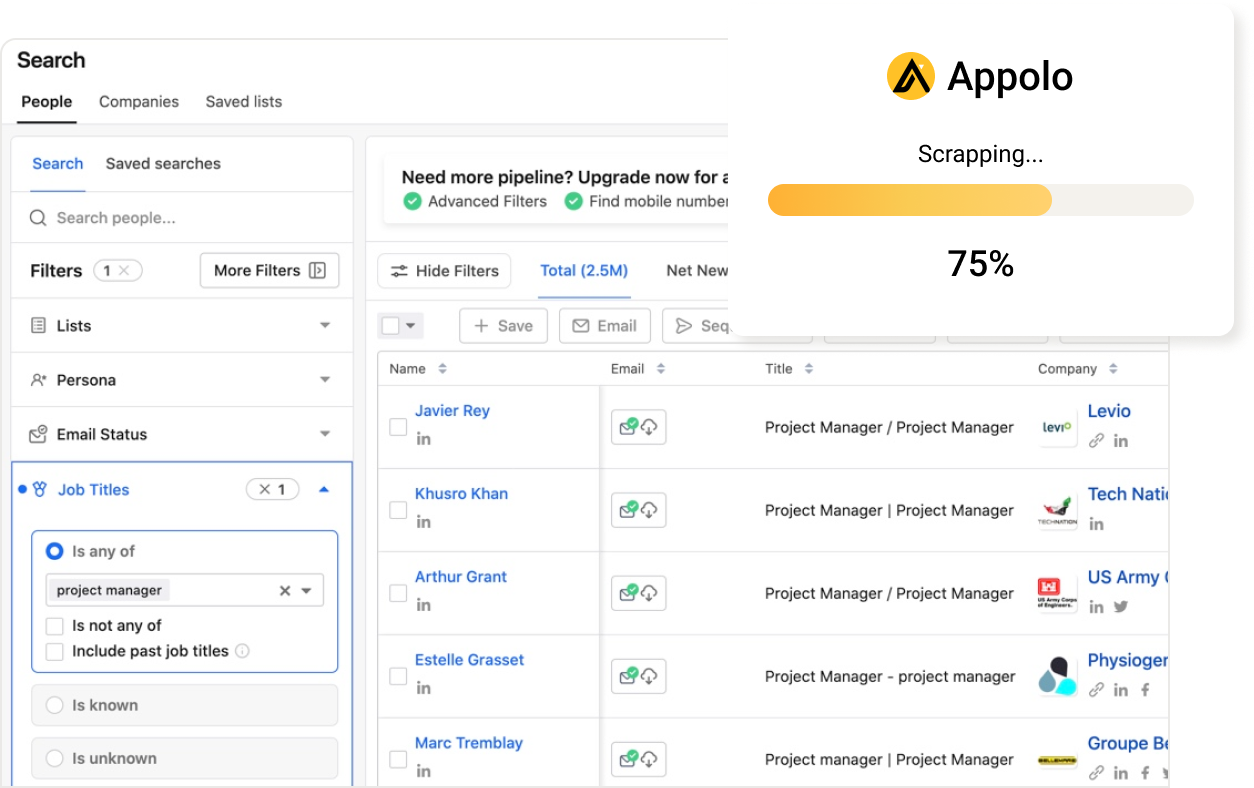

For example, platforms like PhantomBuster and Apify offer scraping capabilities for finding potential leads, which can simplify your outreach process. PhantomBuster provides pre-built automations, while Apify offers a platform for creating and running web scraping and automation tools.

Building Robust Customer Databases (Customer DB) and Market Research

Scraping helps you build and enrich a strong **customer db**.

You can gather public information about your target audience and existing clients.

This data helps you understand market trends, customer needs, and preferences.

It provides a solid foundation for developing informed marketing strategies and product improvements.

Competitor Monitoring and Price Intelligence

Stay ahead of your rivals by continuously monitoring your competitors.

You can track their prices, product changes, new offerings, and promotions in real time.

This intelligence helps you adjust your own business strategies quickly and effectively.

It gives you a crucial competitive edge in today's fast-paced market.
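
As a rough sketch of price monitoring, the snippet below compares two snapshots of scraped prices and reports what changed. The function name `detect_price_changes` and the sample data are assumptions made for illustration only.

```python
from typing import Dict, List, Tuple


def detect_price_changes(
    previous: Dict[str, float], current: Dict[str, float]
) -> List[Tuple[str, float, float]]:
    """Return (product, old_price, new_price) for products whose price changed."""
    changes = []
    for product, new_price in current.items():
        old_price = previous.get(product)
        # Products that are new in `current` have no old price and are skipped.
        if old_price is not None and old_price != new_price:
            changes.append((product, old_price, new_price))
    return changes


# Hypothetical snapshots from two scraping runs.
yesterday = {"widget": 19.99, "gadget": 42.00}
today = {"widget": 17.99, "gadget": 42.00, "gizmo": 5.00}

for product, old, new in detect_price_changes(yesterday, today):
    print(f"{product}: {old} -> {new}")
```

How fresh this intelligence is depends on how often the scraper takes a new snapshot.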

Here is a table showing common uses of scraped data:

| Use Case | Benefit |
|---|---|
| Lead Generation | Find new prospects and contact information efficiently. |
| Market Research | Understand industry trends and customer behavior patterns. |
| Competitor Analysis | Track pricing, products, and strategies of rivals. |
| Content Aggregation | Collect news or articles for a specific topic or niche. |

Navigating the Landscape: Legal, Ethical, and Technical Considerations

Building a **scraper website** involves important legal and ethical rules.

You must understand these boundaries to avoid potential problems.

Ignoring these considerations can lead to serious legal or reputational issues.

Always scrape responsibly and within legal guidelines.

Respecting Robots.txt and Website Terms of Service

Always check a website's **robots.txt** file before scraping.

This file tells automated clients which parts of a site the owner allows or disallows for crawling.

Also, read the website's terms of service carefully and thoroughly.

These documents outline what is permitted and prohibited for data collection.
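
A `robots.txt` check can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a hypothetical `robots.txt` from a string so it runs without network access; the user-agent name `my-scraper` and the URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would load the live file,
# for example with parser.set_url("https://example.com/robots.txt") and parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer can_fetch() queries."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)
print(parser.can_fetch("my-scraper", "https://example.com/products"))
print(parser.can_fetch("my-scraper", "https://example.com/private/data"))
```

Calling `can_fetch` before every request is a cheap way to keep a scraper within the site's stated crawling rules. It does not replace reading the terms of service.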

Data Privacy: GDPR, CCPA, and Responsible Data Scraping

Data privacy laws such as GDPR and CCPA apply to scraped data, and you must follow them.

They protect individuals' personal information online from misuse.

Only scrape publicly available data, and never private or sensitive information.

For instance, if you want to find out who owns a Twitter account, you are limited to what the profile shows publicly; scraping private user data is prohibited and unethical.

Bypassing Anti-Scraping Measures: Proxies and User-Agents

Many websites use measures to block automated scrapers.

These can include IP blocks, CAPTCHAs, or complex JavaScript challenges.

Using **proxies** can help you rotate IP addresses, making your requests appear from different locations.

Changing your **user-agent** can also make your scraper look more like a regular web browser.
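
The two techniques above can be sketched with the standard `urllib.request` module. The user-agent strings and the proxy address below are placeholders, not working values, and the snippet only builds the request objects without sending anything.

```python
import itertools
import urllib.request

# Hypothetical values for illustration; substitute your own.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
]
PROXY_URL = "http://proxy.example.com:8080"

_user_agent_cycle = itertools.cycle(USER_AGENTS)


def build_request(url: str) -> urllib.request.Request:
    """Create a request whose User-Agent header rotates on every call."""
    return urllib.request.Request(url, headers={"User-Agent": next(_user_agent_cycle)})


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Create an opener that routes HTTP and HTTPS traffic through a proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


request = build_request("https://example.com/")
opener = build_proxy_opener(PROXY_URL)
# opener.open(request, timeout=10) would send the request through the proxy.
print(request.get_header("User-agent"))
```

Note that `urllib` stores header names in capitalized form, which is why the lookup uses `"User-agent"`.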

Tools and Technologies for Building Your Scraper Website

Creating a powerful **scraper website** needs the right set of tools.

Many programming languages and specialized libraries can assist you.

Choosing the best options depends on your project's specific requirements.

Let's explore some of the most popular and effective choices available.

Choosing Your Programming Language: Python (Scrapy, Beautiful Soup) vs. JavaScript (Puppeteer)

Python is a very popular and versatile choice for web scraping projects.

Libraries like **Scrapy** and **Beautiful Soup** make data extraction efficient and straightforward.

JavaScript with **Puppeteer** is excellent for dynamic websites that rely heavily on JavaScript.

It can control a real browser, handling complex interactions and single-page applications.

Essential Libraries and Frameworks for a Robust Scraper Website

Here are some key tools you might use to build your scraper:

| Tool | Language | Primary Use |
|---|---|---|
| Scrapy | Python | Full-fledged web crawling and scraping framework |
| Beautiful Soup | Python | HTML/XML parsing library for easy data extraction |
| Requests | Python | Making simple and robust HTTP requests |
| Puppeteer | JavaScript | Headless Chrome browser automation and scraping |
| Cheerio | JavaScript | Fast, flexible, and lean DOM parsing for the server |

Browser Developer Tools: How to See Cookies in Inspect Element

Browser developer tools are incredibly useful for understanding web pages.

They help you inspect elements, network requests, and storage.

You can also see a site's cookies there: in Chrome, open the developer tools and look under the Application tab; in Firefox, look under the Storage tab.

Knowing which cookies a site sets helps you understand its sessions and keep your **webscrape** consistent across requests.
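
Once you have seen which cookies a site sets, you can reuse them in code. The sketch below assumes a made-up cookie string and shows one way to parse it with `http.cookies` and attach it to a request. The helper names are hypothetical.

```python
import urllib.request
from http.cookies import SimpleCookie

# Hypothetical cookie string, as it might be copied from the browser's developer tools.
RAW_COOKIE_HEADER = "sessionid=abc123; theme=dark"


def parse_cookie_header(raw: str) -> dict:
    """Turn a raw Cookie header string into a name -> value dictionary."""
    cookie = SimpleCookie()
    cookie.load(raw)
    return {name: morsel.value for name, morsel in cookie.items()}


def request_with_cookies(url: str, cookies: dict) -> urllib.request.Request:
    """Attach cookies to a request so the server sees the same session."""
    header_value = "; ".join(f"{name}={value}" for name, value in cookies.items())
    return urllib.request.Request(url, headers={"Cookie": header_value})


cookies = parse_cookie_header(RAW_COOKIE_HEADER)
request = request_with_cookies("https://example.com/account", cookies)
print(cookies)
```

Only reuse cookies from sessions you are entitled to use, such as your own login.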

A Step-by-Step Guide to Creating Your Own Data Scraper

Building your own functional **data scraper** involves a clear, systematic process.

It begins with careful planning and extends through data storage.

Following a structured approach ensures efficiency and success in your project.

Let's break down the essential steps involved in this exciting process.

Planning Your Webscrape: Identifying Target Data and Structure

Start by identifying exactly what specific data you need to collect.

Look closely at the website's HTML structure to see how the data is organized.

This thorough planning phase saves a significant amount of time later in development.

It ensures you collect only the most relevant and necessary information.

Writing the Code: From Request to Data Extraction

First, your code needs to send an HTTP request to the target website's server.

Then, you parse the HTML content you receive in response.

You use CSS selectors or XPath expressions to pinpoint the exact data elements.

Finally, you extract this information into a clean, usable format like text or numbers.
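
The parse-and-extract steps can be sketched with Python's standard `html.parser` module. This version matches elements by class name instead of using a full CSS selector or XPath engine, which libraries such as Beautiful Soup or Scrapy provide. The sample HTML and the `ClassTextExtractor` helper are assumptions for illustration.

```python
from html.parser import HTMLParser
from typing import List

# Hypothetical page fragment; a real scraper would download this HTML first,
# for example with urllib.request.urlopen(url).read().decode("utf-8").
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li class="product"><span class="name">Gadget</span> <span class="price">$42.00</span></li>
</ul>
"""


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element that carries a given class name.

    Simplification: capture stops at the first closing tag, so elements
    with nested child tags are not fully handled.
    """

    def __init__(self, class_name: str) -> None:
        super().__init__()
        self.class_name = class_name
        self.matches: List[str] = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.class_name in classes:
            self._capturing = True
            self.matches.append("")

    def handle_data(self, data):
        if self._capturing:
            self.matches[-1] += data

    def handle_endtag(self, tag):
        self._capturing = False


def extract_by_class(html: str, class_name: str) -> List[str]:
    extractor = ClassTextExtractor(class_name)
    extractor.feed(html)
    return [text.strip() for text in extractor.matches]


names = extract_by_class(SAMPLE_HTML, "name")
# Convert price strings such as "$19.99" into numbers.
prices = [float(text.lstrip("$")) for text in extract_by_class(SAMPLE_HTML, "price")]
print(list(zip(names, prices)))
```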

Storing and Organizing Your Scraped Information

Once extracted, you need to store your valuable data effectively.

Common formats include CSV files, JSON documents, or various types of databases.

Organize your data clearly and consistently for easy access and analysis.

Always clean and structure your data for optimal future use and integration.

Here are common data storage options for scraped data:

| Storage Type | Description | Best For |
|---|---|---|
| CSV/Excel | Simple, text-based files with comma-separated values. | Small to medium datasets, easy sharing and quick analysis. |
| SQL Database (e.g., PostgreSQL, MySQL) | Structured query language databases with defined schemas. | Large, complex, relational datasets requiring strong consistency. |
| NoSQL Database (e.g., MongoDB) | Non-relational databases for flexible data models. | Unstructured or semi-structured data, high scalability needs. |
| JSON Files | Lightweight data-interchange format, human-readable. | Hierarchical data, often used for web APIs and small projects. |
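
To make the options above concrete, here is a small sketch that writes the same hypothetical records to CSV, JSON, and an SQL database. It uses SQLite from the standard library as a stand-in for PostgreSQL or MySQL. The `products` table and its columns are invented for the example.

```python
import csv
import io
import json
import sqlite3
from typing import Dict, List

# Hypothetical scraped records.
RECORDS = [
    {"name": "Widget", "price": 19.99},
    {"name": "Gadget", "price": 42.00},
]


def to_csv(records: List[Dict]) -> str:
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records: List[Dict]) -> str:
    """Serialize records to a JSON array."""
    return json.dumps(records, indent=2)


def to_sqlite(records: List[Dict], connection: sqlite3.Connection) -> int:
    """Upsert records into a `products` table and return the row count."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT PRIMARY KEY, price REAL)"
    )
    connection.executemany(
        "INSERT OR REPLACE INTO products (name, price) VALUES (:name, :price)", records
    )
    connection.commit()
    return connection.execute("SELECT COUNT(*) FROM products").fetchone()[0]


print(to_csv(RECORDS))
print(to_json(RECORDS))
print(to_sqlite(RECORDS, sqlite3.connect(":memory:")))
```

Using the product name as the primary key means a repeated scrape updates existing rows instead of duplicating them.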

Leveraging Scraped Data for Business Growth & Insights

A well-built **scraper website** can drive significant business growth.

Raw data becomes truly valuable when you analyze and interpret it.

It provides profound insights that inform strategic business decisions.

Let's see how to transform collected data into actionable intelligence and opportunities.

Transforming Raw Data into Actionable Intelligence

The real power of web scraping lies in effective data analysis.

Clean, process, and enrich your scraped data to remove noise.

Look for meaningful patterns, emerging trends, and important anomalies.

This process transforms raw information into actionable intelligence for your team.
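
A cleaning pass might look like the sketch below, which normalizes names, parses price strings, and drops duplicates and unparseable rows before computing a simple average. The field names and sample rows are assumptions, not data from a real site.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical raw rows with the kinds of noise scraped data often contains.
RAW_ROWS = [
    {"name": " Widget ", "price": "$19.99"},
    {"name": "widget", "price": "$19.99"},
    {"name": "Gadget", "price": "42"},
    {"name": "Gizmo", "price": "n/a"},
]


def clean_rows(rows: List[Dict[str, str]]) -> List[Dict]:
    """Normalize names, parse prices, and drop unparseable rows and duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        name = row["name"].strip().lower()
        try:
            price = float(row["price"].replace("$", "").replace(",", ""))
        except ValueError:
            continue  # skip rows whose price is not a number
        if name in seen:
            continue  # skip duplicates after normalization
        seen.add(name)
        cleaned.append({"name": name, "price": price})
    return cleaned


cleaned = clean_rows(RAW_ROWS)
average_price = mean(row["price"] for row in cleaned)
print(cleaned)
print(round(average_price, 2))
```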

Fueling a Lead Generation Website with Targeted Data

Targeted data makes your lead generation efforts much more powerful.

You can personalize your outreach messages based on specific insights.

This leads to significantly higher conversion rates and better sales results.

Platforms like Apollo.io and Lusha specialize in providing refined B2B lead data, allowing you to focus on closing deals rather than time-consuming data collection.

Integrating Data into Your Customer DB or CRM

Integrate your valuable scraped data directly into your CRM system.

This keeps your **customer db** updated, complete, and highly accurate.

A unified data source improves customer service and strengthens relationships.

It also streamlines your sales and marketing workflows for greater efficiency.
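
One way to picture the integration step is an "upsert" that fills gaps in existing customer records without overwriting what your team already entered. The sketch below uses a plain dictionary as a stand-in for a CRM; the `domain` key and the other field names are hypothetical, and existing keys are assumed to be lower-cased already.

```python
from typing import Dict, List


def merge_into_customer_db(customer_db: Dict[str, dict], scraped: List[dict]) -> Dict[str, dict]:
    """Upsert scraped records into a dict keyed by lower-cased company domain.

    Existing non-empty fields are kept; scraped values only fill gaps.
    """
    # Copy the records so the caller's data is not modified.
    merged = {key: dict(record) for key, record in customer_db.items()}
    for record in scraped:
        key = record["domain"].strip().lower()
        existing = merged.setdefault(key, {})
        for field, value in record.items():
            if value and not existing.get(field):
                existing[field] = value
    return merged


# Hypothetical CRM contents and scraped records.
crm = {"example.com": {"domain": "example.com", "industry": "", "owner": "Ana"}}
scraped = [
    {"domain": "Example.com", "industry": "Retail", "owner": "Bob"},
    {"domain": "new.example", "industry": "SaaS"},
]

for domain, record in merge_into_customer_db(crm, scraped).items():
    print(domain, record)
```

Preferring existing values over scraped ones is a design choice for this sketch; some teams may instead prefer the most recent value.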

Here are some key benefits of using a scraper website for your business:

| Benefit Category | Specific Advantage |
|---|---|
| Efficiency | Automates data collection, saving significant manual effort and time. |
| Accuracy | Reduces human error in data entry and ensures consistent data quality. |
| Insights | Provides real-time market data for informed decision-making. |
| Competitiveness | Enables continuous monitoring of competitors and market trends. |
| Growth | Fuels lead generation and customer relationship management with fresh data. |

Building a powerful **scraper website** can unlock vast opportunities for your business.

It empowers you with continuous, data-driven insights to stay competitive.

Remember to always prioritize ethical and legal practices in your scraping activities.

With the right tools and knowledge, you can master web data extraction and leverage its full potential.

Frequently Asked Questions About Scraper Websites

What is a Scraper Website and How Does it Help Businesses?

A scraper website is an automated system that collects data from the internet continuously.

It goes beyond simple scripts, working 24/7 to gather and update information for your business.

This powerful scraper website helps by providing fresh data for market research and competitor analysis.

It can also power a lead generation website by finding new potential customers efficiently.

How Can I Use a Webscrape to Improve My Customer Database?

You can use a webscrape to enrich your existing customer db with public information.

This helps you understand your customers better, like their preferences or market trends.

For example, you might find publicly listed company roles or industry news related to your clients.

This data makes your marketing efforts more targeted and effective, boosting customer relationships.

What Are the Legal and Ethical Rules for a Data Scraper?

When building a data scraper, you must follow important legal and ethical rules.

Always check a website's `robots.txt` file, which tells you what you can scrape.

Also, carefully read the website's terms of service to understand their rules.

Responsible data collection is key for any effective data scraper operation, as discussed in detail under Navigating the Landscape.

- Respect `robots.txt` directives.

- Comply with website terms of service.

- Adhere to data privacy laws like GDPR and CCPA.

- Only collect publicly available information.

Can a Scraper Website Help with Social Media Information?

A scraper website can gather publicly available social media data, like public posts or profiles.

However, you must be very careful about privacy and platform terms of service.

For example, if you want to find out who owns a Twitter account, you can only rely on information the profile makes public.

Scraping private user data is generally against the rules and often illegal.

What Technical Steps Help a Webscrape Avoid Being Blocked?

To prevent your webscrape from being blocked, you can use several technical methods.

One common way is to use **proxies**, which hide your original IP address by routing requests through different servers.

Another helpful tip is to change your **user-agent** string to mimic a regular web browser.

A successful webscrape often depends on cleverly bypassing anti-scraping measures like CAPTCHAs and IP blocks, as outlined in the Bypassing Anti-Scraping Measures section.

How Does a Data Scraper Help with Competitor Monitoring?

A data scraper helps you keep a close eye on your competitors automatically.

It can track their product prices, new offerings, and promotional campaigns in real time.

This constant monitoring gives you up-to-date insights into their market strategies.

You can then adjust your own business plans quickly to stay competitive and make smart decisions.

What are the Best Ways to Store Data from a Scraper Website?

After your scraper website collects data, you need to store it effectively for future use.

For smaller projects, simple CSV or JSON files work well and are easy to manage.

For larger, more complex datasets, consider using a database like SQL (e.g., PostgreSQL) or NoSQL (e.g., MongoDB).

Organizing your data properly ensures it is ready for analysis and integration into your customer db or CRM systems.
